For participants only. Not for public distribution.

Note #5

John Nagle

(Preliminary, undergoing revision.)

Stereo vision processing produces depth information from multiple images. Here's what I know about the subject, and what we need to do to make it work.

There are two types of stereo vision involved here - binocular stereo, where images from two cameras are compared, and motion stereo, where successive frames from a moving camera are compared. Both are needed.

Binocular stereo

Binocular stereo works at short ranges, whether the vehicle is still or moving. But unless the separation between the cameras is huge, it doesn't work well beyond roughly 20x-40x the distance between the cameras. The Point Grey Bumblebee binocular stereo system is a small unit with a 12cm baseline. Realistically, this yields a range of 2-5 meters. So we can't go very fast with that binocular stereo device alone.
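The rule of thumb above is easy to check numerically. This is a minimal sketch, not a model of any particular product: the function name is made up for illustration, and the 20x and 40x multipliers are simply the ones quoted in this note.

```python
def useful_range_m(baseline_m, low_mult=20.0, high_mult=40.0):
    """Return the (near, far) ends of the useful-range band for a baseline,
    using the 20x-40x rule of thumb quoted in the note."""
    return baseline_m * low_mult, baseline_m * high_mult


# A 12 cm baseline, as on the Bumblebee unit.
near, far = useful_range_m(0.12)
print(f"12 cm baseline: roughly {near:.1f} to {far:.1f} m")
```

For a 12cm baseline this gives a band of about 2.4 to 4.8 meters, which agrees with the 2-5 meter figure.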

The obvious answer is to place the cameras further apart, perhaps the whole width of the vehicle. This creates an alignment problem. The mounting of the cameras would need to be very rigid. (John Walker and I encountered a multicamera scanner in the 1980s which dealt with this problem by brute force, mounting the cameras on an Invar I-beam.) That probably wouldn't work for us. The alignment precision required is about half a pixel, which, for a 640 x 480 pixel camera with a 50 degree field of view, is about 2 minutes of arc. In an offroad vehicle, that's hopeless over a 2-meter distance.
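The half-pixel figure can be checked with a little arithmetic. The sketch below is illustrative only. The function names are made up here, and pixels are treated as evenly spread across the field of view. The depth-error formula is the usual pinhole-stereo approximation, which this note doesn't state: range Z = f*B/d, so a disparity error of dd pixels gives a range error of roughly Z^2/(f*B)*dd.

```python
import math


def arcmin_per_pixel(fov_deg, width_px):
    """Average angle covered by one pixel, in minutes of arc."""
    return fov_deg * 60.0 / width_px


def focal_length_px(fov_deg, width_px):
    """Pinhole focal length in pixels for a given horizontal field of view."""
    return (width_px / 2.0) / math.tan(math.radians(fov_deg) / 2.0)


def depth_error_m(range_m, baseline_m, focal_px, disparity_err_px=0.5):
    """Approximate range error caused by a disparity (alignment) error.

    From Z = f*B/d, a small disparity error dd gives dZ ~= Z^2 / (f*B) * dd.
    """
    return range_m ** 2 / (focal_px * baseline_m) * disparity_err_px


# The 50 degree, 640-pixel-wide camera from the text.
half_pixel_arcmin = 0.5 * arcmin_per_pixel(50.0, 640)
f_px = focal_length_px(50.0, 640)
print(f"half a pixel is about {half_pixel_arcmin:.2f} minutes of arc")
print(f"range error at 5 m, 12 cm baseline: {depth_error_m(5.0, 0.12, f_px):.2f} m")
print(f"range error at 20 m, 2 m baseline:  {depth_error_m(20.0, 2.0, f_px):.2f} m")
```

Under these assumptions, half a pixel comes out a little over 2 minutes of arc. A half-pixel error costs roughly the same range accuracy at 20 meters with a 2-meter baseline as it does at 5 meters with a 12cm baseline, which is why the wide baseline is attractive if the alignment could be held.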

That's not how humans do it. Humans drive on motion stereo.

Motion stereo

For motion stereo, the baseline is the distance moved between one frame and the next. Thus, the faster you go, the further you can see.
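To put numbers on this, here is a minimal sketch of the baseline available from vehicle motion. The function name, the 30 frames per second rate, and the speeds are assumptions chosen for illustration. Comparing frames several steps apart, rather than adjacent frames, is one way to get a longer baseline at low speed.

```python
def motion_baseline_m(speed_m_s, frame_rate_hz, frames_apart=1):
    """Distance the camera travels between two frames `frames_apart` apart."""
    if frame_rate_hz <= 0:
        raise ValueError("frame rate must be positive")
    return speed_m_s * frames_apart / frame_rate_hz


# Assumed 30 Hz camera at a few illustrative speeds (m/s).
for speed in (2.0, 5.0, 10.0):
    one = motion_baseline_m(speed, 30.0)
    ten = motion_baseline_m(speed, 30.0, frames_apart=10)
    print(f"{speed:4.1f} m/s: {one:.3f} m per frame, {ten:.2f} m over 10 frames")
```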

Again, we have an alignment problem, and a tough one. We need a very good estimate of the camera move from one frame to the next. We can get an approximate alignment from inertial sensors, but we'll have to align more precisely by visual means.

(Need references)

Limitations of depth from motion stereo

Motion stereo would seem sufficient to create a depth map of the environment. But the view of the road ahead seen through the windshield is less useful for depth perception than one would expect. Small errors in depth measurement result in much larger errors in height, due to the oblique viewpoint. This effect gets worse with range. It's not clear how much range one can get with motion stereo.

Optical flow

"Optical flow" images can be thought of as a set of vectors, one per pixel, representing the motion from one image to another. Image sequences that show uniform optical flow indicate a smooth surface. This alone may be enough to indicate whether the terrain ahead is easily traversed. ***MORE***

Image processing

The first step in processing is to rectify the image, that is, to remove lens distortion so that straight lines in the scene come out straight in the image. The goal is to generate the image a pinhole camera would produce. This is computationally expensive and requires a calibration process for each camera. But it's done routinely in the Point Grey software and elsewhere.
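As an illustration of what the per-camera calibration feeds into, here is a minimal sketch of removing radial lens distortion from a single point. It assumes a one-coefficient radial model (distorted = undistorted * (1 + k1 * r^2), in normalized image coordinates) and inverts it by fixed-point iteration. The model, the coefficient value, and the function names are assumptions for illustration. This note doesn't say what model the Point Grey software uses.

```python
def distort(xu, yu, k1):
    """Apply one-coefficient radial distortion to a normalized image point."""
    factor = 1.0 + k1 * (xu * xu + yu * yu)
    return xu * factor, yu * factor


def undistort(xd, yd, k1, iterations=20):
    """Invert `distort` by fixed-point iteration, starting from the distorted point.

    Converges when the distortion is mild (|k1 * r^2| well below 1).
    """
    xu, yu = xd, yd
    for _ in range(iterations):
        factor = 1.0 + k1 * (xu * xu + yu * yu)
        xu, yu = xd / factor, yd / factor
    return xu, yu


# Round trip with an assumed barrel-distortion coefficient.
k1 = -0.2
xd, yd = distort(0.3, 0.2, k1)
print(undistort(xd, yd, k1))  # should come back very close to (0.3, 0.2)
```

Doing this for every pixel of every frame, plus interpolation, is where the computational expense comes from.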

The next step is image correlation. ***MORE***
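As a placeholder for that discussion, here is a minimal sketch of brute-force correlation along one scanline of a rectified pair. It uses the sum of absolute differences (SAD) as the match score, which is one common choice. Nothing in this note says which measure any of the systems below actually use, and the function names and parameters are made up for illustration.

```python
def sad(a, b):
    """Sum of absolute differences between two equal-length pixel windows."""
    return sum(abs(x - y) for x, y in zip(a, b))


def best_disparity(left_row, right_row, x, half_window=2, max_disparity=8):
    """Disparity at column x of left_row, found by sliding a window along right_row.

    Assumes a rectified pair, so a feature at column x in the left image
    appears at column x - d in the right image for some d >= 0.
    """
    lo, hi = x - half_window, x + half_window + 1
    if lo < 0 or hi > len(left_row):
        raise ValueError("window falls outside the row")
    patch = left_row[lo:hi]
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        if lo - d < 0:
            break  # shifted window would run off the left edge
        cost = sad(patch, right_row[lo - d:hi - d])
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


# A bright blob centered at column 5 in the right row, shifted 3 columns in the left row.
right_row = [10, 10, 10, 10, 50, 90, 50, 10, 10, 10, 10, 10, 10, 10, 10, 10]
left_row = [10, 10, 10] + right_row[:-3]
print(best_disparity(left_row, right_row, x=8))
```

A real correlator does this for every pixel, usually with 2D windows, and has to cope with regions that have too little texture to match.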

Available systems

Several off-the-shelf depth-from-stereo systems are available.

Point Grey Research

Point Grey has been selling two- and three-camera stereo vision systems for years. Right now (December, 2002), they can't deliver anything, because Sony isn't delivering the imaging chips they need. Their stereo system is simple and straightforward. It's so simple it may have trouble in difficult environments. Their main application is "people tracking" in crowds, using overhead cameras.

Tyzx

Tyzx, despite considerable press hype about their being the low-cost solution, isn't actually shipping. A call to them resulted in a callback from their CEO, who admitted that all they really had available was a $25,000 prototype system. Their main application is also "people tracking", which apparently is viewed in computer vision as a way to make money on "homeland security". Their technology is actually about a decade old, but they've only recently obtained funding. Unfortunately, the funding is from Paul Allen, usually a bad sign.

SRI Small Image Toolkit / Videre

SRI has a Small Image Toolkit, which can supposedly be purchased from Videre for $300. Videre also sells matching cameras, including a $3000 two-camera pair 23cm apart. Videre claims to be able to obtain useful depth from that unit out to 50 meters. That's more than 200 times the baseline, far beyond the 20x-40x rule of thumb. This is impressive, if true.

Principles of Computer System Design for Stereo Perception (a recent CMU Robotics lab tech report) contains an evaluation of this system, with pictures.

[Image: Arctic rock field]

They report acceptable performance for robot navigation on images like the one above. Unfortunately, they don't provide both halves of the stereo pair, so we can't reprocess the images ourselves. This system is worth a serious evaluation.

Uncalibrated Stereo by Singular Value Decomposition

|

|

|

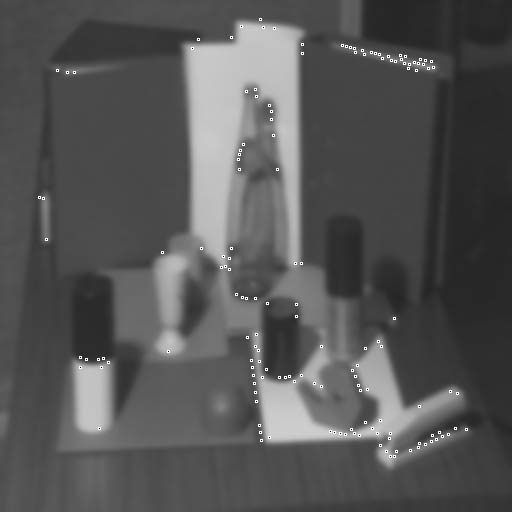

"Corners"

extracted with corner

extractor in SVD stereo package. (Note low quality of corner extraction.) Click for larger image. |

This is a classic approach to stereo - recognize "features", and match them up. That's where computer vision started, about 30 years ago. It's usually less effective than brute-force correlation. But it has its uses.

The big advantage of feature matching is that features can be matched over a sizable distance so that camera alignment isn't critical. The big disadvantage is that finding "features" usually doesn't work well.

This is probably more useful as a camera calibration technique than as a primary stereo algorithm. But it's worth having, since it lets us align cameras automatically on any scene with some corners in it. Once alignment information is obtained, it can be used to preprocess the images before sending them to a correlator. This should be especially effective in dealing with cameras which are misaligned rotationally. If we have something in the field of view at a known distance, like a hood ornament, we can probably have fully automatic alignment.
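To illustrate how matched features could yield a rotational correction, here is a minimal sketch that estimates the in-plane rotation between two sets of matched points by least squares, after removing each set's centroid. This is not the method used in the SVD stereo package. It also ignores the fact that features in a real stereo pair shift by different disparities, so treat it as a rough illustration only. The function name and test points are made up.

```python
import math


def rotation_between(points_a, points_b):
    """Least-squares rotation (radians) taking points_a onto points_b.

    Both arguments are equal-length lists of matched (x, y) points.
    Each set's centroid is removed first, so a pure translation gives 0.
    """
    n = len(points_a)
    if n != len(points_b) or n < 2:
        raise ValueError("need at least two matched point pairs")
    cax = sum(p[0] for p in points_a) / n
    cay = sum(p[1] for p in points_a) / n
    cbx = sum(p[0] for p in points_b) / n
    cby = sum(p[1] for p in points_b) / n
    s_dot = s_cross = 0.0
    for (ax, ay), (bx, by) in zip(points_a, points_b):
        ax, ay, bx, by = ax - cax, ay - cay, bx - cbx, by - cby
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    return math.atan2(s_cross, s_dot)


# Made-up corner positions, rotated by 2 degrees and shifted.
corners = [(100.0, 50.0), (400.0, 80.0), (250.0, 300.0), (520.0, 410.0)]
angle = math.radians(2.0)
moved = [(x * math.cos(angle) - y * math.sin(angle) + 5.0,
          x * math.sin(angle) + y * math.cos(angle) - 3.0) for x, y in corners]
print(math.degrees(rotation_between(corners, moved)))
```

The recovered angle could then be used to counter-rotate one image before it goes to the correlator.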

The author of this paper, who's at HP in Bristol, UK, sent me his code, which I've run. The image above was created with that code, run on a QNX machine. The code runs both on Windows (compiled with MSVC 6.0) and now on QNX, compiled with gcc. It finds slightly different features on the two platforms, which is disconcerting, and I'm trying to figure out why.

Chabat/Yang/Hansell corner detector

This 1999 paper covers a new algorithm for finding "corners" in an image. This one looks much better than the one in the "stereo by SVD" code. But there's no immediately available code for it.

2001 Workshop on Stereo Vision

In 2001, a conference on stereo vision was held in Hawaii. The papers provide a good overview of the different approaches. Most of the systems mentioned here were represented.

Open Source Computer Vision Library

This is an Intel project that's now on SourceForge. I'm just starting to look at it. It has a stereo matcher, said to be real-time on Intel CPUs. (The code can use some Intel-only features, if desired.)

This library looks promising as an architectural base, since it provides a wide range of image processing functions, built on common data structures. More on this later.