For participants only. Not for public distribution.

Note #29

John Nagle

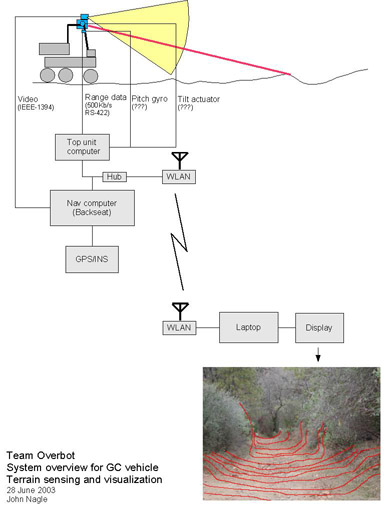

Here, in one place, is a summary of the system design.

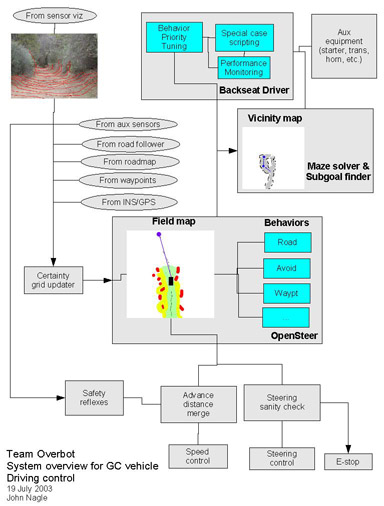

Driving

This subsystem makes driving decisions. Most steering is done by the OpenSteer system, using input data from the sensor visualization subsystem. OpenSteer is a field-based steering control system: the vehicle is attracted to the next waypoint, attracted to the road location, and repelled by obstacles and untraversable ground. OpenSteer can't handle dead ends, so the "backseat driver" monitors its performance and, when it gets stuck, inserts subgoals to take the vehicle in a different direction. The "backseat driver" has a vicinity map of what the vehicle has seen recently and access to a path planner that can solve simple mazes. Outputs from OpenSteer are monitored by low-level safety and sanity checks, which use sensor data (primarily the VORAD radar and the sonars) to veto actions from the steering system. This system's performance can be monitored by watching the field map and the vicinity map.
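To make the three layers in the Driving description concrete, here is a minimal Python sketch of a field-based steering vector, a stuck check of the kind the "backseat driver" might run, and a low-level veto. It is an illustration only and is not OpenSteer's API. All function names, gains, and thresholds (`steering_force`, `is_stuck`, `safety_veto`, `k_waypoint`, `influence`, `stop_distance`, and so on) are hypothetical, and the inverse-distance repulsion is one common choice assumed here, not something this note specifies.

```python
import math


def steering_force(pos, waypoint, road_point, obstacles,
                   k_waypoint=1.0, k_road=0.5, k_obstacle=4.0, influence=5.0):
    """Sum the field contributions at pos; all gains are assumed values."""
    fx = fy = 0.0
    # Attraction: a unit vector toward each target, scaled by its gain.
    for target, gain in ((waypoint, k_waypoint), (road_point, k_road)):
        dx, dy = target[0] - pos[0], target[1] - pos[1]
        dist = math.hypot(dx, dy)
        if dist > 1e-9:
            fx += gain * dx / dist
            fy += gain * dy / dist
    # Repulsion: pushes away from each obstacle inside the influence radius,
    # growing as the obstacle gets closer.
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        dist = math.hypot(dx, dy)
        if 1e-9 < dist < influence:
            strength = k_obstacle * (1.0 / dist - 1.0 / influence)
            fx += strength * dx / dist
            fy += strength * dy / dist
    return fx, fy


def is_stuck(recent_positions, min_progress=1.0):
    """True if the vehicle moved less than min_progress over the window."""
    if len(recent_positions) < 2:
        return False
    (x0, y0), (x1, y1) = recent_positions[0], recent_positions[-1]
    return math.hypot(x1 - x0, y1 - y0) < min_progress


def safety_veto(command_speed, range_ahead, stop_distance=3.0):
    """Override the commanded speed with 0 if a sensor range is too short."""
    return 0.0 if range_ahead < stop_distance else command_speed
```

With an obstacle directly between the vehicle and the waypoint, the summed vector in this sketch points backward rather than around the obstacle, which is the kind of situation where a monitor like `is_stuck` would have to insert a subgoal.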

Notes:

- The "certainty grid updater" area needs more detail.

- There are ways to use field maps to control non-holonomic robots (where turning radius is limited), but we need to look into that problem further.

- OpenSteer will probably require substantial modification.
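
As a starting point for the turning-radius question in the notes above, one simple approach is to clamp the heading change requested by the field to what the vehicle can physically achieve in one control step. The sketch below assumes a vehicle moving at constant speed whose maximum turn rate is speed divided by minimum turning radius. The function name and parameters are hypothetical, and this is not a technique the note commits to.

```python
import math


def clamp_heading_change(current_heading, desired_heading,
                         speed, dt, min_turn_radius):
    """Move toward desired_heading, limited by the minimum turning radius.

    Angles are in radians, speed in distance units per second, dt in seconds.
    """
    # Smallest signed angle from current to desired, in [-pi, pi).
    error = (desired_heading - current_heading + math.pi) % (2 * math.pi) - math.pi
    # At radius r and speed v the turn rate is v / r, so one step of
    # length dt can change the heading by at most v * dt / r.
    max_change = speed * dt / min_turn_radius
    change = max(-max_change, min(max_change, error))
    return current_heading + change
```

A clamp like this keeps the commanded path drivable, but it does not by itself stop the field from asking for turns the vehicle cannot complete before reaching an obstacle, so it is only a partial answer to the problem.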